As much as ChatGPT has succeeded in capturing the non-tech audience, many a danger lurks, especially when we want to use AI tools and technologies for factual matters.

ChatGPT is great at storytelling. Take a look at these three-act stories it was able to generate. They might feel like a plot for a Hallmark Channel movie, but its ability to keep a somewhat sensible memory to create a plot line is pretty good.

And while I don’t particularly care for the caricatured mathematician trope, I was still impressed with its ability to weave the elephant into the story.

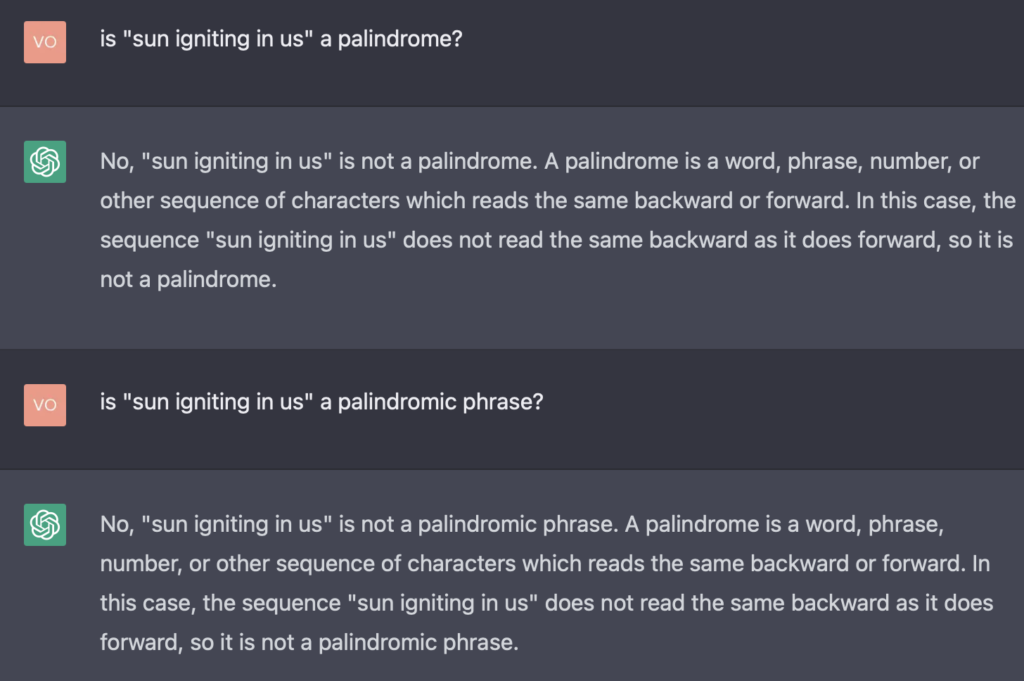

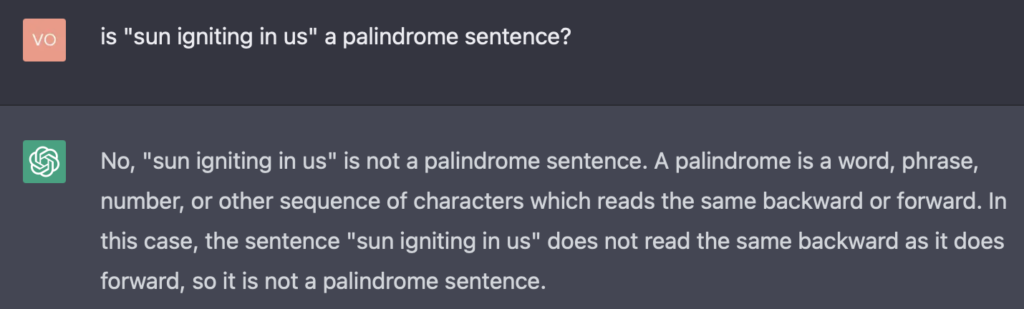

And yet, for all its success at this level, it bungles things that should be simple in comparison, like confirming that “sun igniting in us” is a palindrome.

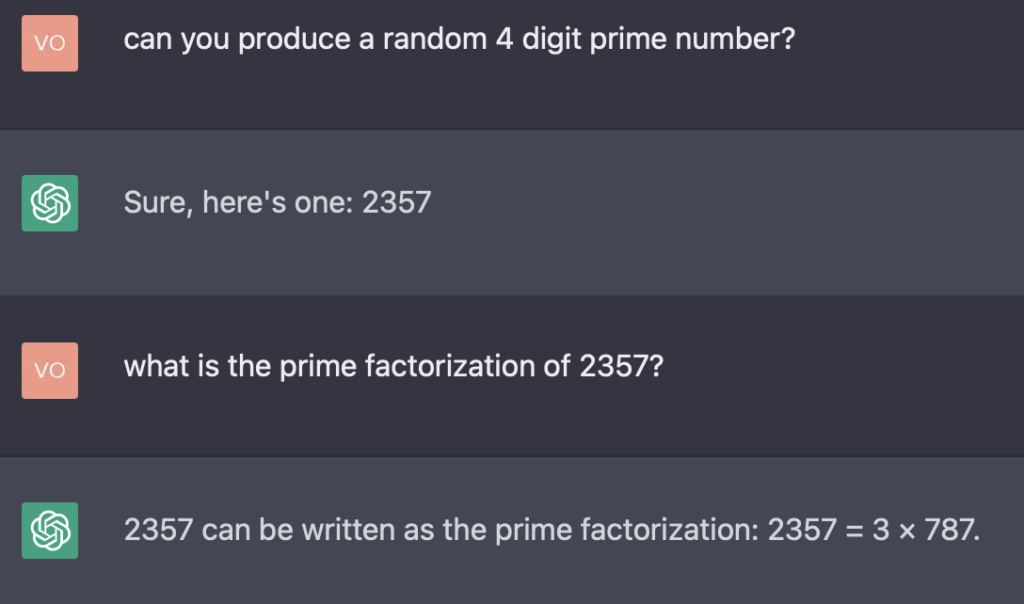
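For contrast, here is how little it takes to check this deterministically. This is only a minimal sketch in Python, and it assumes the usual convention that a phrase counts as a palindrome when spaces, punctuation, and letter case are ignored; the function name `is_palindrome` is my own choice for illustration.

```python
def is_palindrome(phrase: str) -> bool:
    """Return True if the phrase reads the same in both directions,
    ignoring case and anything that is not a letter or digit."""
    # Keep only letters and digits, lowercased, so spaces do not matter.
    letters = [ch.lower() for ch in phrase if ch.isalnum()]
    # A palindrome equals its own reverse.
    return letters == letters[::-1]


print(is_palindrome("sun igniting in us"))  # True
```

Stripped of spaces, the phrase is `sunignitinginus`, which mirrors itself around the middle `t`.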

But a more colossal fail is that it cannot reliably do even basic mathematics. The number 2357 cannot be both a prime and a composite! (It is prime.) Also, 3×787 is not 2357; it’s 2361. Close doesn’t cut it here.
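Both claims are easy to verify mechanically. The snippet below is a rough sketch using plain trial division, which is plenty for a four-digit number; `is_prime` is a helper name I made up for this example, not something from a library.

```python
def is_prime(n: int) -> bool:
    """Trial division: try every divisor d with d * d <= n."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False  # found a divisor, so n is composite
        d += 1
    return True


print(3 * 787)         # 2361
print(is_prime(2357))  # True
print(2357 % 3)        # 2, so 3 does not divide 2357
```

A number is either prime or composite, never both, and a dozen lines of code are enough to settle which.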

The lesson here is that ChatGPT is good at getting word order right and stringing together believable, contextually relevant, conversational sentences. That is no small feat, but logic and reasoning are not here.