So ChatGPT is a thing (now) and is pretty much sucking up all the oxygen in the AI space. Educators are either apoplectic about next-level cheating or embracing the technology to create more dynamic lessons, to teach critical thinking and research, or to hammer home the point that “just because the computer said so doesn’t make it true.” Microsoft is going to invest billions in the technology and integrate it into its suite of products. Google is rumored to be coming out with a competing technology since, apparently, ChatGPT is supposed to bring down Google.

Its uses are legion. ChatGPT was used to write a book in under 3 hours, build an entire marketing campaign, write code, and the list goes on.

As impressive as these feats are to the tech-uninitiated, ChatGPT also dives into fantasy worlds pretty easily.

> Today I asked ChatGPT about the topic I wrote my PhD about. It produced reasonably sounding explanations and reasonably looking citations. So far so good – until I fact-checked the citations. And things got spooky when I asked about a physical phenomenon that doesn’t exist.
>
> (Teresa Kubacka, @paniterka_ch, December 5, 2022)

And there are big-name detractors.

But this is part and parcel of all “new” technologies. There’s good, there’s bad, there’s comedy, there are unintended consequences, there are friends, there are enemies, there are frenemies.

My take on ChatGPT is that it is “Computer” from Star Trek: The Next Generation. Or at least, that’s a strong direction for it. It’s not sentient (though for Trekkies, yes, there was an episode about Computer giving birth to a lifeform). But it sure does a lot of the heavy lifting that lets the crew keep to their mission.

Here’s one simple use case as a research assistant. The TL;DR is “a great research assistant / lackey, but don’t trust it with logic and reasoning.” Read more to be amused.

Here I ask for a technical term for when “a” vs “an” is used and ChatGPT tells me it is called “indefinite article agreement” along with an explanation of when “a” vs “an” is used. Not bad.

So I take it one step further and ask whether any studies have been done on how speakers anticipate when “a” vs “an” will be used. Again, I get a coherent and plausible response. Not for nothing, this already exceeded my expectations, since my question was on (what I believe to be) an esoteric topic.

And so, in the style of interfacing with “Computer”, I increase the level of specificity and ask it to provide up to a dozen studies on this cognitive process. And lo and behold, I get what I asked for! Absolutely amazing, in that it saved hours of hunting for papers. I could have asked for more than a dozen, but hey, I set the parameters.

Now what comes next is bizarre and interesting at the same time. It’s good to have the paper references and authors, but now I’d like actual website references for these papers so I can review them directly. But ChatGPT refuses, perhaps as a general fail-safe by the ChatGPT stewards.

Ok fine, maybe it can summarize the first paper? Nope. Something something about a 2021 data cutoff, and the paper from 2000 not being in its training data. I mean, that’s reasonable and all, since it did say that a bunch of these are behind paywalls.

So then I decided to make the request broader and just asked whether it could summarize any of the papers. That was met with a negative and a small lecture about my obligation to read the papers directly.

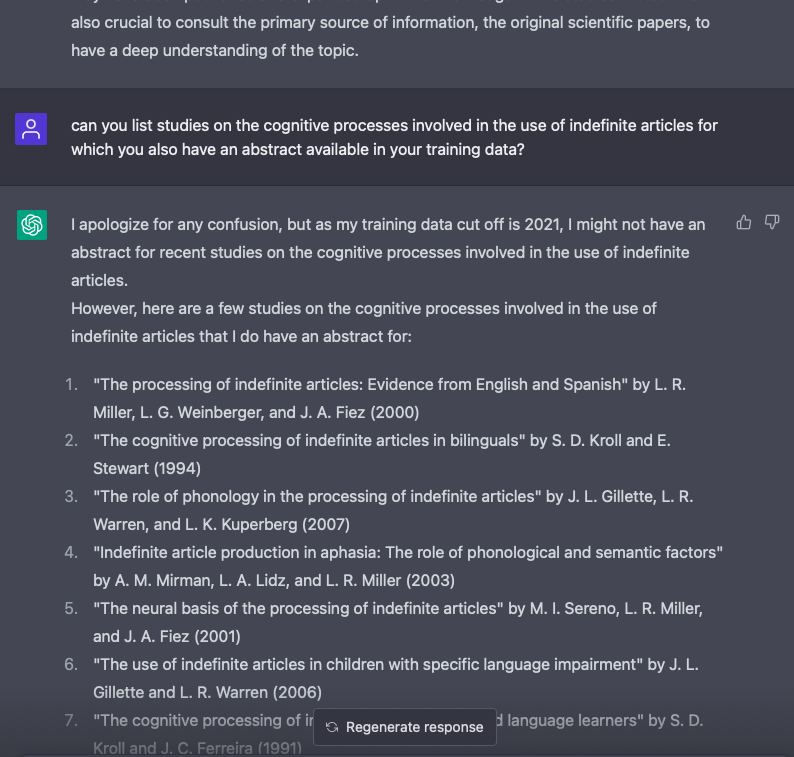

Ok, ok. I get it. This interaction / interface is, in concept, no different from programming. So I try a different approach and ask it to provide studies for which it also has an abstract available. And huzzah! I get references! But buzz-kill-ah … they’re the same papers it referenced earlier.

Well, ok. It did say it had abstracts for those studies, so I’ll take its word for it, even though it couldn’t produce those abstracts before. Sometimes you just have to connect the proverbial dots explicitly.

And we’re in an infinite loop: “I can’t provide an abstract. But here are papers where I can provide an abstract. But I can’t provide abstracts for the papers I just said I could provide abstracts for.”

Even more shocking than the infinite loop, the articles don’t show up in internet searches. I can find titles close to the ones ChatGPT gives, but no exact matches. Not all of the authors show up either. A bit worrisome in that regard.

While the language is all good and coherent, what’s lacking is actual logic, reasoning, and quite possibly verifiable information (e.g., the citations ChatGPT provided). The AI overlords aren’t coming for our jobs, yet. But this is how products grow. It’s well-hyped, and fairly so: it checks a lot of boxes and clears more than a minimal bar as something for its target audience (the masses) to play with. The messaging about its limitations is fine, and any sensible user will be forgiving here. This is good product strategy.

It’s easy to be the critic and pick apart what is really a future-state deliverable, but anyone who has ever built a product, or knows anything about product development, knows that “a product is never finished, only abandoned.”

For now, if I were to use ChatGPT, I would use it as a research assistant and a template generator. I’m not going to trust its logic and reasoning, as those don’t exist. This is “Computer” in its very early years, and I’m excited to see what comes next.