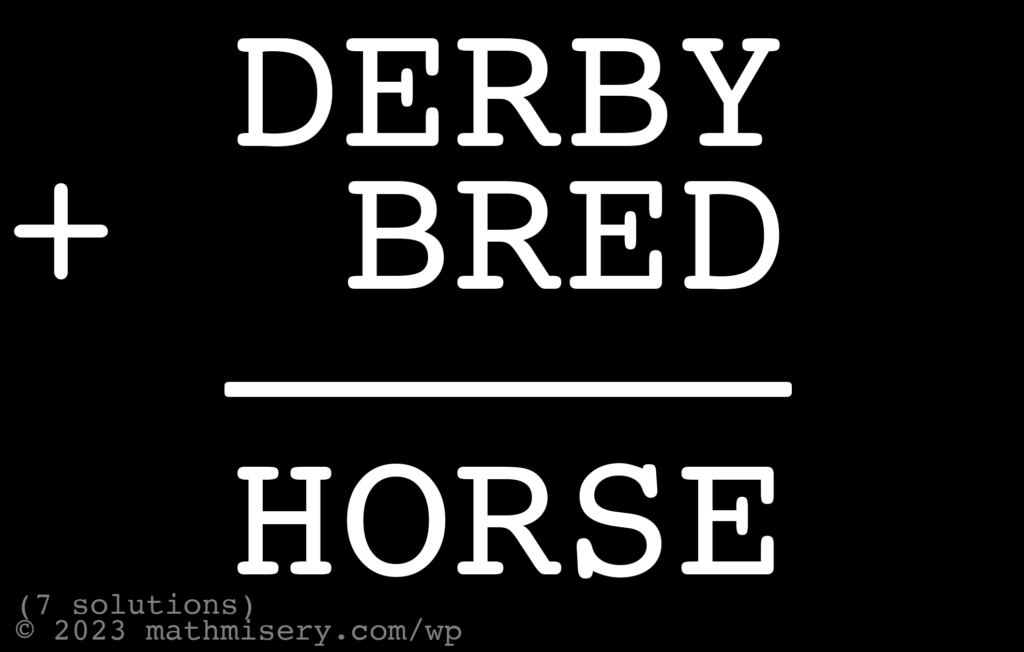

Palindrome puzzles are hard to make! So enjoy this one. There are several more in the queue. The palindrome here is “Derby bred” and the puzzle is DERBY + BRED = HORSE.

Solutions are below the image.

SPOILER SOLUTIONS!

| DERBY | BRED | HORSE |
|---|---|---|
| 16975 | 7961 | 24936 |
| 18957 | 5981 | 24938 |
| 18967 | 6981 | 25948 |
| 38925 | 2983 | 41908 |
| 57932 | 3975 | 61907 |
| 58923 | 2985 | 61908 |
| 68952 | 5986 | 74938 |
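
If you would rather check the solutions than take them on faith, here is a minimal brute-force sketch. It assumes the usual cryptarithm conventions, which the puzzle does not spell out: each letter stands for a different digit, and no word starts with zero. The function names `word_value` and `solve` are made up for this example.

```python
from itertools import permutations


def word_value(word, digits):
    """Turn a word into a number using a letter -> digit mapping."""
    value = 0
    for ch in word:
        value = value * 10 + digits[ch]
    return value


def solve(addends=("DERBY", "BRED"), total="HORSE"):
    """Try every assignment of distinct digits to the letters.

    Assumptions (standard conventions, not stated in the puzzle):
    distinct letters get distinct digits, and no word has a leading zero.
    """
    words = list(addends) + [total]
    letters = sorted(set("".join(words)))   # 8 distinct letters here
    leading = {w[0] for w in words}         # letters that must not be 0
    solutions = []
    for perm in permutations(range(10), len(letters)):
        digits = dict(zip(letters, perm))
        if any(digits[ch] == 0 for ch in leading):
            continue
        values = [word_value(w, digits) for w in addends]
        target = word_value(total, digits)
        if sum(values) == target:
            solutions.append((*values, target))
    return sorted(solutions)


if __name__ == "__main__":
    for derby, bred, horse in solve():
        print(f"{derby} + {bred} = {horse}")
```

The search walks through roughly 1.8 million digit assignments, so it takes a few seconds. Under the stated assumptions it should print the same seven rows as the table above.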