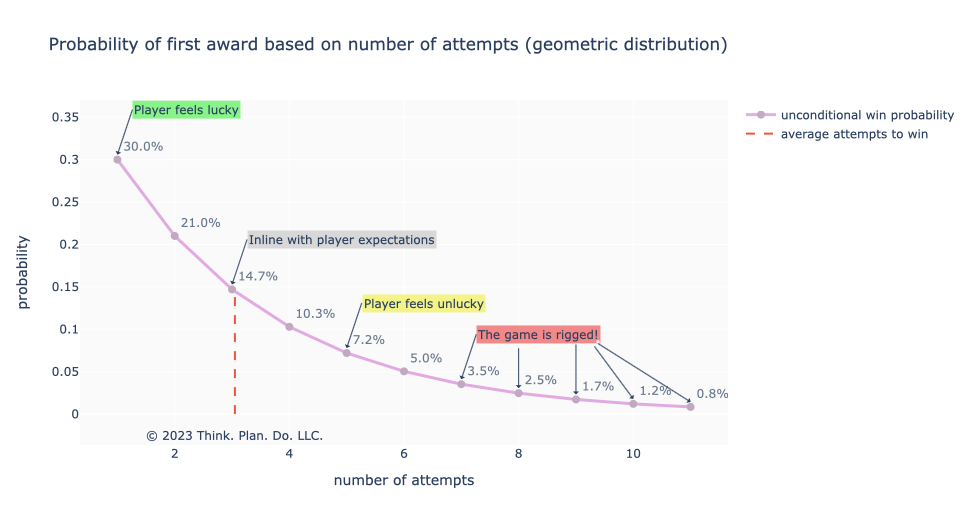

Consider this example: a player has a 33% chance of winning a collectible in your game. If they miss, they can try again. Each try costs some amount of your game’s currency (usually “coins” or “tickets”). We know that, on average, it will take about 3 tries for the player to win. But that’s only the average. It could happen on the first try. It could happen on the sixth try. In theory, there’s no limit to how many tries it could take (in practice, extremely long streaks essentially never happen). This is the Geometric Distribution (Wikipedia).
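To put numbers on “it could happen on the sixth try”, here is a small sketch of the geometric distribution’s standard formulas: the chance that the first win lands exactly on try k, the chance of still waiting after k tries, and the mean of 1/p. It treats the 33% as exactly one in three; the function names are hypothetical helpers written for this illustration.

```python
def prob_first_win_on(k: int, p: float) -> float:
    """P(first win happens on try k): k - 1 misses, then a win."""
    return (1 - p) ** (k - 1) * p


def prob_still_waiting_after(k: int, p: float) -> float:
    """P(no win in the first k tries), i.e. k misses in a row."""
    return (1 - p) ** k


def expected_tries(p: float) -> float:
    """Mean number of tries, counting the winning one: 1 / p."""
    return 1 / p


p = 1 / 3  # the 33% from the example, treated as exactly one in three
print(f"expected tries: {expected_tries(p):.2f}")
for k in range(1, 7):
    print(f"try {k}: first win here {prob_first_win_on(k, p):5.1%}, "
          f"still waiting after it {prob_still_waiting_after(k, p):5.1%}")
```

With these odds, about 30% of players (8/27) still haven’t won after the “average” 3 tries, and roughly 9% (64/729) are still empty-handed after six.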

You know what? Players absolutely despise this game mechanic for two main reasons:

- Most players understand and accept good luck and bad luck. But they draw a mental line at extreme bad luck, and once it’s crossed, they start believing in a conspiracy.

- Enough people feel that if the “average” is 3 attempts, things should balance out. So if it took 4 tries one time, they expect the next time to take only 2: a gambler’s fallacy of sorts.
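The “things should balance out” intuition is easy to check. Each try is independent (the geometric distribution is memoryless), so a long unlucky run says nothing about the next one. Below is a minimal simulation sketch, assuming independent tries at a fixed p = 1/3; `tries_until_win` and `avg_next_run` are hypothetical helpers, and the 4-try threshold for a “bad” run is an arbitrary choice.

```python
import random


def tries_until_win(p: float, rng: random.Random) -> int:
    """Roll independent tries until the first win; return how many it took."""
    tries = 1
    while rng.random() >= p:  # rng.random() is uniform on [0, 1); below p is a win
        tries += 1
    return tries


def avg_next_run(p: float, bad_run: int, runs: int, seed: int = 0) -> tuple[float, float]:
    """Average length of the run right after a bad run (>= bad_run tries) vs. after any run."""
    rng = random.Random(seed)
    lengths = [tries_until_win(p, rng) for _ in range(runs)]
    pairs = list(zip(lengths, lengths[1:]))
    after_bad = [nxt for prev, nxt in pairs if prev >= bad_run]
    after_any = [nxt for _, nxt in pairs]
    return sum(after_bad) / len(after_bad), sum(after_any) / len(after_any)


after_bad, overall = avg_next_run(p=1 / 3, bad_run=4, runs=200_000)
print(f"after a 4+ try run: {after_bad:.2f} tries on average; overall: {overall:.2f}")
```

Both averages land close to 3: a rough run doesn’t “earn” the player a shorter one next time.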

Check out this visual for an example of where player sentiment often lands.

Do you know what else happens? Players have memories. They remember their bad beats more than their lucky breaks. The negative emotion is imprinted more strongly, and for longer, than the positive one. And it accumulates. When players suffer through enough bad luck caused by nothing but randomness, they call it quits: as far as they’re concerned, the game is cheating them, and it’s no longer fun.

Game design is difficult enough as it is. In a lot of ways you can’t escape the inherent statistics without building in awkward if-statements or, god forbid, tilting the random number generator to avoid bad luck. As a general warning: don’t mess with randomness, or you will introduce game exploits!

But what do you do? You want there to be collectibles. You want a chance element to it. You don’t want it to be a “skill” thing, because that’s not the game. You shouldn’t screw around with randomness. And ideas like “hey, I’m just going to keep track of state, and if there are more than X consecutive misses, the player is guaranteed an award” or “I will just increase the chance of the award for every miss”, while well-intentioned, turn into a development nightmare because play is asynchronous and spread across many sessions.

You can do one of three things:

- deal with the known churn / bad reviews from this game mechanic

- address it with better, more transparent in-game messaging like “33% chance to win on each try”, but honestly this won’t stop the grumbling, because most people don’t understand probability and statistics

- build a “redemption” game mechanic

Redemption Feature

Yes, it’s a bit more dev work, but a redemption mechanic is an easy way to tell the player “hey, we’re collecting your bad luck and turning it into good luck”. It also gives players the feeling that they’re “building” something.

A redemption mechanic basically works like this. For every failed attempt, you increase a “redemption counter”, which can be shown as a bar or meter filling up, or as a plain counter. Once the counter is full, draw the player’s attention to some kind of gift notification. They click the gift, and out comes the reward. Done. Bad luck is acknowledged and handled.
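Here is a minimal Python sketch of that loop. It’s an illustration under stated assumptions, not a production design: `RedemptionMeter` and `attempt` are hypothetical names, the meter size is a tuning value you’d pick yourself, wins are assumed to leave the meter untouched, and misses beyond a full meter are assumed to carry over rather than be lost.

```python
import random
from dataclasses import dataclass


@dataclass
class RedemptionMeter:
    """Hypothetical redemption meter: every miss adds one point; a full meter unlocks a gift."""
    capacity: int    # misses needed to fill the meter (assumed tuning value)
    filled: int = 0  # current level, shown to the player as a bar or counter

    @property
    def gift_ready(self) -> bool:
        # True when the game should show the gift notification.
        return self.filled >= self.capacity

    def record_miss(self) -> None:
        self.filled += 1

    def claim_gift(self) -> bool:
        """Player clicked the gift: empty one meter's worth and grant the reward."""
        if not self.gift_ready:
            return False
        self.filled -= self.capacity  # assumption: extra misses carry over
        return True


def attempt(meter: RedemptionMeter, p: float, rng: random.Random) -> bool:
    """One paid try. Assumption: a win does not reset the meter."""
    if rng.random() < p:
        return True
    meter.record_miss()
    return False


rng = random.Random(7)
meter = RedemptionMeter(capacity=5)
for _ in range(20):
    attempt(meter, p=1 / 3, rng=rng)
    if meter.gift_ready:
        meter.claim_gift()  # in the game: notification, click, reward drops out
```

The separate `claim_gift` step mirrors the flow described above: the meter fills, the game draws attention to the gift, and the reward only comes out when the player clicks.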

Where Are The Dragons?

Human psychology is wild. If you advertise a 90% chance of winning and a player misses on the first go, then “ThE GaMe Is RiGGeD!!”. If you advertise a 10% chance of winning, players treat it as basically impossible. Keep your win probabilities between 40% and 60%. For “rare” items you can go below 40%, but make it clear to the player that this is a special item and it will take a lot of tries. For “common” items you can go above 60%, but really don’t screw around with reward probabilities above 70%, because at that point you may as well make it 100%.
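To see why the extremes feel bad, here is a quick comparison across the probabilities mentioned above, assuming independent tries at a fixed advertised chance. `odds_profile` is a hypothetical helper, and treating three misses in a row as “bad luck” is an arbitrary choice for illustration.

```python
def odds_profile(p: float, streak: int = 3) -> tuple[float, float, float]:
    """Return (expected tries, P(miss on first try), P(miss `streak` tries in a row))."""
    return 1 / p, 1 - p, (1 - p) ** streak


for p in (0.10, 0.40, 0.50, 0.60, 0.70, 0.90):
    expected, miss_first, miss_streak = odds_profile(p)
    print(f"p={p:4.0%}  expected tries={expected:5.2f}  "
          f"miss first try={miss_first:4.0%}  3 misses in a row={miss_streak:5.1%}")
```

At an advertised 90%, one player in ten still misses the first try, which is exactly the moment that reads as “rigged”. At 10%, a typical player needs ten tries, and nearly three in four (72.9%) miss three times in a row.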

Understand and manage player expectations!

I love designing game mechanics and being your game designer’s sounding board. Get in touch if you want to work together!